Platform Accountability: How Digital Services Must Answer for Their Impact

Platform Accountability has moved from a niche policy topic to a central public concern as millions rely on online services for news, commerce, communication, and civic participation. The term covers the responsibility that social networks, search engines, marketplaces, and other digital platforms have to manage content, protect users, and prevent harm while supporting innovation and free expression. This article explores why Platform Accountability matters, what good accountability looks like, which actors are responsible, and how policies and technologies can work together to create safer, more trustworthy online spaces.

Why Platform Accountability Matters Now

As platforms grow, they shape public conversation, economic opportunity, and political processes. When content moderation fails or algorithmic systems amplify harmful material, the consequences are immediate and wide-reaching. Platform Accountability focuses attention on who makes decisions, how those decisions are made, and how the public can hold platform operators to clear standards. In a world where misinformation can influence elections, safety failures can lead to real-world harm, and consumer rights are at stake, accountability is essential for trust and social stability.

Core Elements of Effective Platform Accountability

Accountability is not a single action. It is a set of practices that ensure platforms act responsibly and that their choices are visible and contestable. Key elements include transparency about policies and algorithms, clear reporting and appeals processes for users, independent oversight, and meaningful remediation when harms occur. Platforms must also invest in robust safety systems and skilled human review to complement automated tools. Finally, accountability requires ongoing evaluation and updates to keep pace with evolving threats and new forms of misuse.

Transparency and Explainability

Transparency means more than publishing a policy document. It requires clear, accessible information about content rules, enforcement statistics, and how ranking and recommendation systems work. Explainability helps users and regulators understand why a piece of content was promoted, demoted, or removed. When platforms provide accessible explanations, they enable researchers, journalists, and civil society to assess whether systems operate fairly.
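To make the link between individual decisions and published statistics concrete, here is a minimal sketch in Python. It assumes a hypothetical log of moderation decisions (the `ModerationAction` record and its fields are illustrative, not any platform's real schema) and shows how the same records can feed both an aggregate transparency report and a plain-language explanation for the affected user.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ModerationAction:
    """One enforcement decision (hypothetical schema for illustration)."""
    content_id: str
    action: str       # e.g. "removed" or "demoted"
    policy: str       # the community-standard clause that was applied
    automated: bool   # True if no human reviewed the decision before enforcement


def enforcement_stats(actions):
    """Aggregate decisions into figures suitable for a transparency report."""
    counts = Counter((a.policy, a.action) for a in actions)
    # Share of decisions taken without prior human review.
    automated_share = sum(a.automated for a in actions) / len(actions) if actions else 0.0
    return {"counts": dict(counts), "automated_share": automated_share}


def explain(action):
    """Build a plain-language explanation for the user affected by a decision."""
    who = "an automated system" if action.automated else "a human reviewer"
    return (f"Your content {action.content_id} was {action.action} by {who} "
            f"under the '{action.policy}' policy. You can appeal this decision.")


# Usage with a small, made-up decision log.
log = [
    ModerationAction("post-1", "removed", "spam", True),
    ModerationAction("post-2", "demoted", "misleading health claims", False),
]
print(enforcement_stats(log))
print(explain(log[1]))
```

Generating both outputs from one record is a design choice worth noting: it makes it harder for published statistics and user-facing explanations to drift apart.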

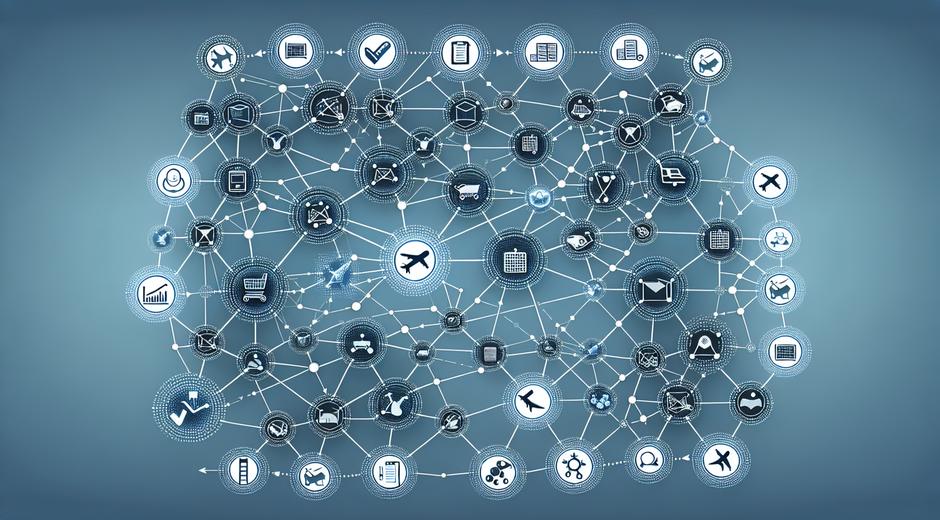

Responsibility of Platform Operators

Platform operators bear primary responsibility for the design and operation of their services. This includes setting enforceable community standards, investing in moderation capacity, and designing systems that minimize foreseeable harms. Responsibility also extends to supply-chain effects involving advertising networks, content delivery services, and third-party apps that integrate with the platform. Accountability frameworks encourage platforms to consider these broader impacts and to implement safeguards across their entire ecosystem.

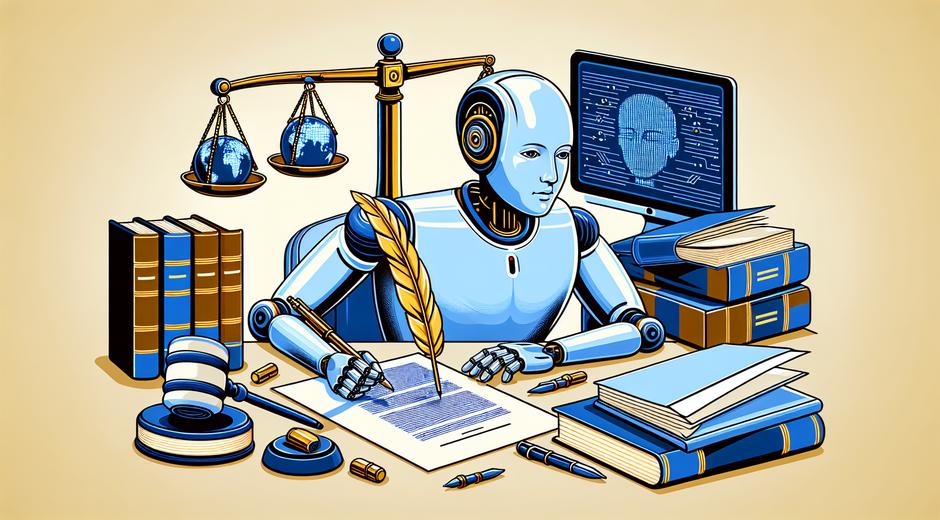

Role of Governments and Regulators

Governments have a key role in establishing legal baselines that define unacceptable harms, require due process, and protect fundamental rights. Effective regulation strikes a balance between protecting citizens and avoiding excessive constraints on speech or innovation. Regulatory approaches can include mandatory transparency reports, independent audits, conditions attached to safe harbor protections, and processes for the urgent removal of content that poses immediate danger. Collaborative models that include civil society, industry, and academic experts often yield more durable outcomes.

Independent Oversight and Audits

Independent oversight bodies and third-party audits add credibility to accountability claims. External reviews can assess algorithmic fairness, data practices, and the effectiveness of moderation systems. Auditors can work with platforms to evaluate compliance with both legal obligations and voluntary commitments. Independent oversight is especially important where platforms hold de facto control over critical public spheres, such as political advertising or public discourse.
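One way an auditor might measure the effectiveness of a moderation system is to compare its decisions with independent labels on a sample of content. The sketch below assumes the auditor has a list of `(flagged_by_system, violates_per_auditor)` pairs, a hypothetical input format chosen for illustration. It computes standard classification metrics: precision (how often actioned content really violated policy), recall (how much violating content was caught), and the false-positive rate (how often compliant content was wrongly actioned).

```python
from typing import Iterable, Tuple


def audit_metrics(sample: Iterable[Tuple[bool, bool]]) -> dict:
    """Compare a moderation system's decisions with an auditor's labels.

    Each item is (flagged_by_system, violates_per_auditor) for one piece of
    content in the audit sample.
    """
    tp = fp = fn = tn = 0
    for flagged, violates in sample:
        if flagged and violates:
            tp += 1  # correctly actioned
        elif flagged:
            fp += 1  # actioned although the auditor found no violation
        elif violates:
            fn += 1  # violation the system missed
        else:
            tn += 1  # compliant content correctly left alone
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    false_positive_rate = fp / (fp + tn) if fp + tn else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "false_positive_rate": false_positive_rate,
    }


# Usage with a tiny, made-up sample.
sample = [(True, True), (True, False), (False, True), (True, True), (False, False)]
print(audit_metrics(sample))
```

A real audit would presumably draw a random sample, report uncertainty around these figures, and break them down by language, region, or policy area, since aggregate numbers can hide uneven performance.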

User Rights and Remedies

Accountability includes giving users clear rights, such as the right to appeal content decisions, to receive human review, and to get meaningful explanations. Remedies should address harm quickly and restore users' content or accounts when wrongful removals or hidden suppression of content occur. Platforms that offer robust redress mechanisms tend to build stronger trust and reduce public backlash. Effective appeals processes should be transparent and timely, and should include options for independent review when necessary.
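The appeal rights described above can be modeled as a small state machine. The following is a minimal sketch, not any platform's actual workflow: the `Appeal` class, its status names, and the seven-day `REVIEW_DEADLINE` are hypothetical choices made for illustration. It captures three ideas from the text: appeals should be decided by a human, they should be timely, and an upheld decision can be escalated to independent review.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Hypothetical service-level target; the article does not specify one.
REVIEW_DEADLINE = timedelta(days=7)


@dataclass
class Appeal:
    content_id: str
    filed_at: datetime
    # Transitions: "pending" -> "upheld" or "reversed"; "upheld" -> "escalated".
    status: str = "pending"
    history: list = field(default_factory=list)

    def is_overdue(self, now: datetime) -> bool:
        """An undecided appeal older than the deadline breaches the timeliness goal."""
        return self.status == "pending" and now - self.filed_at > REVIEW_DEADLINE

    def human_review(self, reviewer: str, original_decision_correct: bool, now: datetime) -> None:
        """Record a human reviewer's decision on a pending appeal."""
        if self.status != "pending":
            raise ValueError("appeal already decided")
        self.status = "upheld" if original_decision_correct else "reversed"
        self.history.append((now, reviewer, self.status))
        # A reversal should also trigger restoring the content (not modeled here).

    def escalate(self, now: datetime) -> None:
        """Send an upheld decision to independent review at the user's request."""
        if self.status != "upheld":
            raise ValueError("only upheld decisions can be escalated")
        self.status = "escalated"
        self.history.append((now, "independent-review-queue", self.status))


# Usage: an appeal is upheld by a reviewer, then escalated by the user.
filed = datetime(2024, 1, 1)
appeal = Appeal("post-1", filed)
appeal.human_review("reviewer-42", True, filed + timedelta(days=2))
appeal.escalate(filed + timedelta(days=3))
print(appeal.status, appeal.history)
```

Keeping a timestamped history on each appeal is what would let an outside body later check whether the process was actually timely.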

Technical Tools and Responsible Design

Technology can support Platform Accountability through well-documented APIs, well-tested algorithms, and privacy-preserving data governance. Responsible design practices include privacy by default, minimal data retention, and explicit consent for sensitive operations. Machine learning tools must be trained and tested to minimize bias and to avoid amplifying harmful content. When platforms invest in governance-friendly engineering patterns, they make compliance and oversight more feasible.
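Privacy by default, minimal retention, and explicit consent can each be expressed directly in code. The sketch below is illustrative only: the `PrivacySettings` fields, the 90-day `RETENTION` window, and the helper functions are hypothetical, and real retention periods depend on the applicable law and the purpose of collection.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical retention window; the article does not name one.
RETENTION = timedelta(days=90)


@dataclass(frozen=True)
class PrivacySettings:
    """Privacy by default: every optional data use starts disabled (hypothetical fields)."""
    personalized_ads: bool = False
    share_with_partners: bool = False
    precise_location: bool = False


def purge_expired(records, now, retention=RETENTION):
    """Keep only the (collected_at, payload) records still inside the retention window."""
    return [(ts, payload) for ts, payload in records if now - ts <= retention]


def can_use_precise_location(settings: PrivacySettings, consent_recorded: bool) -> bool:
    """Sensitive processing requires both the opt-in setting and a recorded explicit consent."""
    return settings.precise_location and consent_recorded


# Usage: a stale record is dropped, and a new user starts with location use disabled.
now = datetime(2024, 6, 1)
records = [(now - timedelta(days=10), "recent"), (now - timedelta(days=120), "stale")]
print(purge_expired(records, now))
print(can_use_precise_location(PrivacySettings(), consent_recorded=True))
```

Encoding the defaults in the data type itself means a new account cannot silently start with data sharing enabled, which is also easy for an auditor to verify.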

Economic Incentives and Business Models

Monetization strategies influence platform behavior. Business models that prioritize engagement at all costs can encourage sensational and polarizing content. Accountability requires examining how advertising, algorithms, content moderation, and platform governance interact with revenue incentives. Alternative business models that reduce pressure to maximize short-term engagement may align platform incentives with public interest goals. Thoughtful policy can nudge platforms toward sustainable practices that protect users and support healthy communities.

Multi-Stakeholder Collaboration

No single actor can achieve Platform Accountability alone. Collaboration among platforms, regulators, civil society, researchers, and user communities is necessary to design rules, align incentives, and exchange best practices. Public interest research and independent monitoring initiatives play a crucial role in exposing systemic failures and proposing evidence-based remedies. At the same time, platforms must engage transparently with these actors and provide access to data that enables independent study while protecting user privacy.

Global Considerations and Local Contexts

Platform Accountability must work across borders while respecting local legal and cultural norms. Global platforms operate in many jurisdictions with differing legal standards and social expectations. A one-size-fits-all approach can create gaps in protection or conflict with fundamental rights in some places. Effective accountability frameworks are adaptable, ensuring basic protections everywhere while allowing local implementation to reflect context-specific needs.

Pathways Forward for Policy and Practice

Advancing Platform Accountability requires coordinated action across policy, business, and civil society. Concrete steps include mandatory transparency reporting, clear standards for content governance, independent auditing, and stronger user rights for appeal and data access. Capacity building for regulators and support for public interest research are also vital. Businesses can adopt public commitments, measurable goals, and independent oversight to demonstrate progress. Finally, dialogue between stakeholders will help refine the norms and technical standards that make accountability meaningful and enforceable.

Conclusion

Platform Accountability is a priority for any society that values free expression and public safety. It demands a mix of legal clarity, transparent practices, and technological care. Platforms, regulators, and citizens all have roles to play. By investing in clear rules, independent oversight, and user-centered remedies, we can build digital spaces that are both innovative and responsible.

As the digital landscape evolves, continuous evaluation and adaptation will be required. Platform Accountability is not a fixed destination but an ongoing process that strengthens democratic resilience and protects individuals in a networked world.