AI Regulation: Navigating Rules for Responsible Innovation

As artificial intelligence systems move from research labs into everyday life, AI Regulation has become a central topic for governments, companies, and civil society. Clear rules can protect privacy, prevent harm, and promote trust, while overly rigid limits can slow innovation and economic growth. This article examines what AI Regulation means, why it matters, how different regions approach it, and what practical steps stakeholders can take to shape balanced policy that supports safe and beneficial technology.

What Does AI Regulation Mean in Practice?

AI Regulation covers the laws, standards, and guidance that govern the development, deployment, and use of artificial intelligence. Key aims include safeguarding human rights, ensuring safety, promoting transparency, and enabling fair access to benefits. Regulation can be broad, covering data governance, or narrow, focusing on specific sectors such as healthcare, finance, or transport. Effective AI Regulation balances risk management with incentives for research and commercial adoption.

Why AI Regulation Matters Now

AI systems increasingly influence decisions that affect daily life, from job selection to medical diagnosis to credit eligibility. These systems can embed biases, amplify misinformation, and create safety risks if not designed responsibly. At the same time, AI can drive productivity, improve health outcomes, and open new markets. Thoughtful AI Regulation helps reduce harms, build public trust, and create a predictable environment for investment and innovation. News organizations and policy watchers follow these developments closely, so informed coverage is essential. For a steady flow of timely reporting, analysis, and updates, visit newspapersio.com.

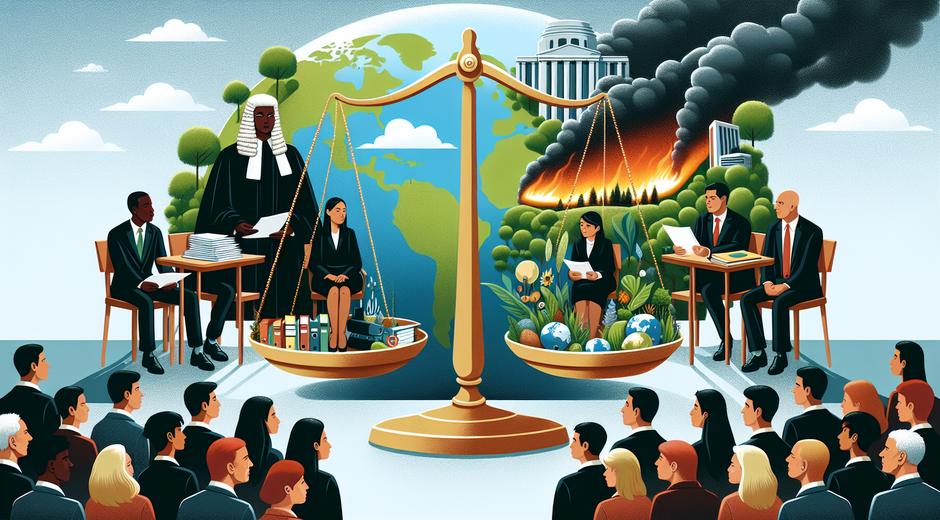

Global Approaches to AI Regulation

Countries and regions are taking different paths. Some favor flexible principles that emphasize accountability and human oversight. Others pursue rule-based systems that require pre-market reviews, certification, or risk assessments. Common themes across approaches include accountability for developers and deployers, measures to protect privacy, mechanisms for redress, and special rules for high-risk applications. The diversity of approaches creates both challenges and opportunities for global coordination.

Core Principles That Policymakers Are Embracing

Several norms are emerging across proposals and existing laws. These principles offer a foundation for crafting specific rules:

- Human oversight to ensure that decisions remain under appropriate control

- Transparency so users can understand how and why systems make decisions

- Fairness to prevent discrimination and ensure equal treatment

- Safety and robustness to limit harm from failures or adversarial attacks

- Data protection to safeguard personal information used to train models

While these principles guide policy, they leave room for tailored measures that reflect local legal traditions, economic priorities, and social values.

Challenges in Designing Effective AI Regulation

Policymakers face several complex challenges when developing AI Regulation. Technology evolves rapidly, and laws that are too prescriptive can become obsolete. Many AI systems rely on large data sets and complex models that are difficult to audit. Regulating international services raises jurisdictional issues. There is also a risk of regulatory fragmentation that creates barriers to trade and increases compliance costs. Addressing these issues requires flexible frameworks, clear definitions, and ongoing dialogue between governments, industry, academia, and civil society.

Sector-Specific Considerations

Different industries pose different risks and require tailored regulatory responses. In healthcare, regulators emphasize clinical validation and patient safety. In finance, the focus is on fairness, risk management, and audit trails. In public services, transparency and accountability are paramount because decisions directly affect civic rights. Sector-specific regulation helps align technical controls with domain knowledge and legal requirements.

Tools and Mechanisms for Implementation

Regulators can implement AI Regulation with a variety of tools that deliver oversight while remaining agile. These include impact assessments, mandatory audits, record-keeping requirements, and certification schemes. Sandboxes allow innovators to test new approaches under regulatory supervision. Standards organizations and third-party auditors can help scale trustworthy practices. Public sector procurement rules can accelerate adoption of safe systems by setting baseline expectations for vendors and contractors.

The Role of Civil Society and Media

Independent research, watchdog groups, and the media play a vital role in holding developers and regulators to account. Investigations can reveal bias, security flaws, or misuse, and drive policy change. Public debate informed by clear, factual reporting helps ensure that AI Regulation reflects democratic priorities and protects vulnerable communities. For expert commentary and technical perspective, see StyleRadarPoint.com, which covers trends and best practices.

Best Practices for Organizations Preparing for AI Regulation

Organizations working with AI should prepare even while policy landscapes continue to change. Practical steps include:

- Mapping AI systems and data flows to understand exposure and risk

- Conducting evidence-based impact assessments before deployment

- Implementing governance structures that assign clear accountability

- Maintaining documentation and model cards that support transparency

- Investing in technical controls for privacy, fairness, and robustness

- Engaging with regulators and industry groups to shape sensible rules

These actions reduce compliance risk, improve product quality, and signal commitment to ethical practice, which can be a market differentiator.
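As a rough illustration of the first few steps above, the sketch below keeps a small inventory of AI systems and reports which preparation steps are still open for each one. Everything in it is an assumption made for illustration: the `AISystem` fields, the `risk_tier` and `readiness_gaps` helpers, and the `HIGH_RISK_SECTORS` set (borrowed from the sectors this article discusses) are hypothetical and do not reflect the risk categories of any specific law.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Illustrative assumption: sectors treated as higher risk in this sketch.
# This set is NOT taken from any statute or regulator's guidance.
HIGH_RISK_SECTORS = {"healthcare", "finance", "public services"}


@dataclass
class AISystem:
    """One entry in a hypothetical internal AI system inventory."""
    name: str
    sector: str
    owner: Optional[str] = None                # accountable person or team
    data_sources: List[str] = field(default_factory=list)
    impact_assessed: bool = False              # impact assessment completed?
    has_model_card: bool = False               # documentation published?


def risk_tier(system: AISystem) -> str:
    """Assign a coarse tier based only on the sector (a simplification)."""
    return "high" if system.sector in HIGH_RISK_SECTORS else "lower"


def readiness_gaps(system: AISystem) -> List[str]:
    """List the preparation steps that are still open for a system."""
    gaps = []
    if system.owner is None:
        gaps.append("no accountable owner assigned")
    if not system.data_sources:
        gaps.append("data flows not mapped")
    if not system.impact_assessed:
        gaps.append("impact assessment missing")
    if not system.has_model_card:
        gaps.append("model card missing")
    return gaps


if __name__ == "__main__":
    inventory = [
        AISystem("triage-assistant", "healthcare", owner="clinical-ml",
                 data_sources=["patient-records"], impact_assessed=True),
        AISystem("playlist-ranker", "media"),
    ]
    for system in inventory:
        print(system.name, risk_tier(system), readiness_gaps(system))
```

A real inventory would likely tier systems by use case and affected population rather than by sector alone; the sector lookup here only keeps the example short.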

Monitoring and Enforcement

Enforcement mechanisms are as important as the rules themselves. Regulators may use audits, fines, or corrective orders to ensure compliance. However, enforcement can also be constructive, using guidance, warnings, and support for remediation. International collaboration on enforcement can reduce regulatory arbitrage and ensure consistent protection for users across borders.

Future Trends in AI Regulation

Looking ahead, AI Regulation will likely become more detailed for high-risk domains while remaining principle-oriented for lower-risk use cases. Expect greater emphasis on supply chain responsibility for models, transparent reporting on model capabilities and limits, and harmonization efforts among trading partners. New technologies such as synthetic data and model explainability tools will influence how compliance is achieved. Continuous engagement among stakeholders will be essential to adapt rules to technical advances and societal priorities.

Conclusion

AI Regulation is not a one-size-fits-all matter. It must balance safety, fairness, and accountability with room for innovation. Policymakers, industry, and civil society all have a role in shaping frameworks that promote beneficial outcomes while reducing risks. Staying informed, participating in public consultation, and adopting best practices will help organizations navigate the evolving landscape. Readers can follow detailed reporting and expert analysis at the news hub referenced earlier as a resource for ongoing developments.

Careful policy design, supported by technical measures and public engagement, can ensure that AI delivers value while protecting rights and safety. The path forward demands collaboration, foresight, and practical action at every step of the lifecycle of AI systems.